Apple Intelligence: These features will be available at launch

It is meant to be private, but above all easy to use: a first look at the Apple Intelligence features coming in iOS 18.

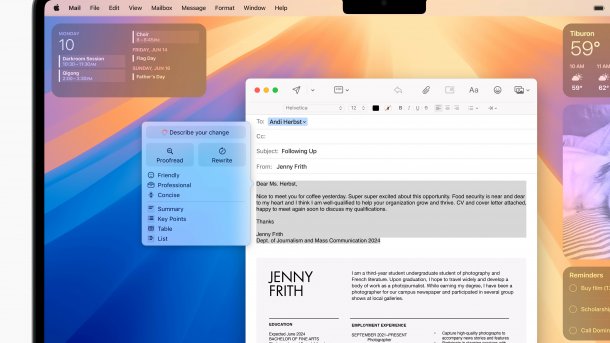

With Apple Intelligence, Apple is integrating writing tools into its operating systems that can be used, for example, to proofread emails or improve wording.

(Image: Apple)

When Apple launches Apple Intelligence in the fall, initially in English and later in other languages, using artificial intelligence is meant to be secure but, above all, simple. At the WWDC developer conference, heise online was able to see just how simple, using several features that are not yet part of the first iOS 18 developer beta currently available. It is still unclear when the features under development will be open for testing. A few details also emerged that were not mentioned in the WWDC opening keynote.

Apple's approach is most evident when it comes to images. With the feature called "Image Wand", a line drawing or even just some existing written text in the Notes app is enough to create a matching image. Since this is apparently also meant to feel magical, the accompanying animation is reminiscent of the sparkle seen in films about wizards. The "magic wand" here is the Apple Pencil, which is used, for example, to circle an existing drawing and trigger the feature via the menu. Apple's in-house generative AI proved very accurate when converting a sketched cake or creating a picture to go with instructions for a dog kennel, although the line drawing of the cake was already quite good. It remains to be seen how well the AI will understand artists who are less skilled at sketching.

Clean up images at the tap of a finger

The "Clean-Up" feature in the Photos app works in a similarly simple way. In a group photo set against a beautiful backdrop, the AI itself identifies a man who has wandered into the frame as a distraction and highlights him with a shimmer. A single tap of a finger makes him disappear, and the AI generates matching background to complete the picture. For other subjects, users have to act themselves, but here too, circling with a finger is enough to remove, for example, two people walking past in the foreground of a wide shot of a park. Notably, the software does not allow the main subject to be removed. Whether this is due to concerns that the images could be misused or to fears of poor-quality results remains to be seen; presumably both play a role. Of course, once the features are released, it will also be necessary to check exactly how convincing the generated background really is.


(Image: Apple)

Apple Intelligence will initially launch in the fall on three platforms and in US English: on the iPhone (iOS), the iPad (iPadOS) and the Mac (macOS). In the car, CarPlay will at least get the revamped Siri voice assistant, with its new shimmering glow around the edge of the screen, along with some features, since CarPlay is essentially just an interface to the iPhone. The Apple Watch, which runs more independently thanks to watchOS, and the HomePods with their own firmware will come away empty-handed for now, despite some ties to the iPhone. This is unfortunate for owners, as devices with a very small screen or no screen at all would benefit significantly from a more natural-sounding Siri.

Can also be used in flight mode

However, the magic of Apple Intelligence requires a powerful processor, which the small devices in particular lack. This is why Apple Intelligence will only be available on the iPhone 15 Pro, the iPhone 15 Pro Max and all Macs and iPads with an M1 chip or later. The good news is that thanks to on-device processing, Apple's AI will often still work on these devices without an internet connection or in flight mode. Depending on the feature, however, there may be limitations, namely when the AI hands tasks off to Apple's more powerful AI servers in the cloud.

Exactly when this happens is not disclosed. Apple evidently does not want to burden users with such details, although experts are naturally itching to coax this secret out of the devices. Only when Apple's own AI models have no answer at all and Siri offers, via a dialog box, to pass the request on to OpenAI's ChatGPT does it become obvious that the answer comes from the cloud. This is particularly likely when, for example, an entirely new text is to be written from scratch or a very long document is to be searched for a specific point.


Small AI helpers for everyday life

Apple's own AI is aimed primarily at small everyday tasks. When proofreading a text, for instance, it is supposed to check spelling, sentence structure, grammar, wording and style, among other things. Once text is highlighted, a small blue arrow appears next to it that opens a menu for selecting the desired function. Existing text can also be rewritten this way. The AI underlines its proposed changes, which can be accepted one by one or all at once with a single tap.

What is striking is that while the text editing tools are aimed more at work that may also be used in a professional context, most of the image-generating features of Apple's AI are more likely to be used privately and for entertainment. Take Genmoji, for example: if you can't find what you're looking for via the search field of the emoji keyboard, in iOS 18 you will be able to tap a button and receive a custom image. It can be sent either as an actual image or as a Tapback in iMessage.

Just the beginning, but already adding value

(Image: Apple)

Image Playground works similarly: instead of rigid text prompts, the user tosses building blocks to the AI via a text field, such as the terms "fireworks" and "bridge". Alternatively, a contact can be selected from the address book. The user can then easily remove the words, which float around the image as bubbles. The result is either comic-like or an illustration, but it never looks like a real photo, as the output of other image generators does by default.

But the release of Apple Intelligence in US English this fall will only be the beginning. Even in this initial form, the Apple Intelligence features known so far should significantly expand what Apple devices can do, and they are likely to become available in German as well in 2025.

(mki)