Blackwell: Nvidia unveils its next generation of AI accelerators

The Blackwell accelerator architecture is said to be up to twice as fast as AMD's MI300X and is intended to consolidate Nvidia's supremacy in AI chips for the coming years.

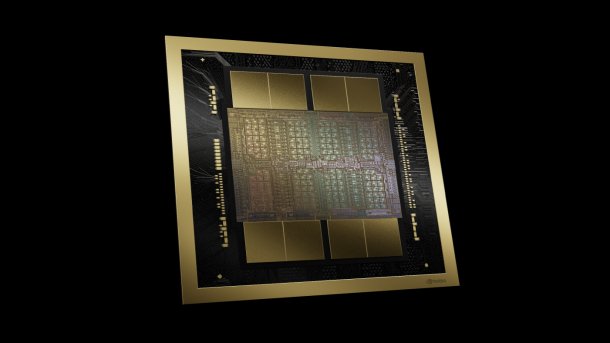

A rendering of Nvidia's B100 accelerator. Barely visible here: the accelerator consists of two chips and eight HBM3e stacks.

(Image: Nvidia)

Nvidia's in-house conference GTC 2024 in San José, California, took place in person for the first time in years and, unsurprisingly, was all about artificial intelligence. Nvidia currently dominates the data center market with its accelerator chips and is posting one record financial result after another in the wake of the AI boom.

Nvidia CEO Jensen Huang wants it to stay that way. The newly unveiled Blackwell accelerator architecture plays a central role here. It will appear in a range of products, from the B100 to the DGX GB200 SuperPOD, which are due to launch over the course of the year. On paper, Blackwell GPUs achieve twice the throughput of AMD's brand-new MI300 accelerators for some data formats.

With the new Blackwell architecture, even smaller data formats such as 4-bit floating point, and new functions, Nvidia is focusing primarily on energy efficiency and data exchange between the individual chips. This is why the NVLink switch and the network technology have also been upgraded.


Nvidia traditionally does not disclose prices and refers inquiries to its partners, but as long as the AI hype continues unabated, the chips are likely to sell almost regardless of pricing. Nvidia has already won Amazon Web Services, Google Cloud and Oracle Cloud as customers, but does not specify exactly when instances will be bookable. Meanwhile, the older H100 products continue to roll off the production line.

Blackwell double chip

Nvidia is breaking new ground with Blackwell, but is remaining true to itself in some ways. The Blackwell "GPU" consists of two individual chips. Nvidia did not want to answer our question as to whether the two are functionally identical, but it did state that both are at the limit of what the lithography exposure allows. This means that they should each be around 800 mm² in size and therefore individually take up about as much space as their predecessors, the H100 (814 mm²) and A100 (826 mm²). Nvidia avoided the word chiplet, as this is usually understood to mean the coupling of different chips.

Nvidia has the Blackwell chips manufactured at TSMC in a process called "4NP", which does not correspond to the foundry's usual nomenclature. Nvidia did not say whether this is a derivative of N4P or what characteristics the process has. However, we assume that it is N4P with a few parameters adjusted to the customer's requirements, as is usual.

The two chips, which Nvidia only ever refers to jointly as the Blackwell GPU, are connected to each other via a fast interface running at 10 TByte per second (5 TByte/s per direction). According to Nvidia, this is sufficient for them to behave like a single GPU in terms of performance. For comparison: we measured around 5 TByte per second for the level 2 cache, which acts as the data hub in Nvidia's high-end GeForce RTX 4090 graphics cards, while the chips in AMD's MI300 accelerators are connected to each other at up to 1.5 TByte/s.

192 GByte HBM3e memory

When it comes to memory, Nvidia goes all out and starts with eight 24-GByte stacks of fast HBM3e memory. This adds up to a total of 192 GByte and a transfer rate of 8 TByte/s. In terms of memory size, the company is on a par with AMD's MI300X, but the B100 has a transfer rate that is around 50 percent higher. The upgrade was also necessary, as Nvidia's H100 generation was lagging behind in terms of memory size. With eight stacks, it is also theoretically possible to switch to 36-GByte stacks at a later date and increase capacity to 288 GByte.
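The capacity and bandwidth figures in this section follow from simple arithmetic, which the following Python sketch reproduces. The function names are purely illustrative; the inputs are the stack counts, stack sizes and transfer rates quoted in the article.

```python
def total_capacity_gbyte(stacks: int, gbyte_per_stack: int) -> int:
    """Total HBM capacity for a given number of equally sized stacks."""
    return stacks * gbyte_per_stack


def percent_higher(new: float, old: float) -> float:
    """By how many percent `new` exceeds `old`."""
    return (new / old - 1.0) * 100.0


STACKS = 8  # HBM3e stacks per Blackwell GPU, as stated in the article

print(total_capacity_gbyte(STACKS, 24))  # 192 (GByte, launch configuration)
print(total_capacity_gbyte(STACKS, 36))  # 288 (GByte, possible later upgrade)

# Transfer rate in TByte/s: Blackwell (8) versus AMD MI300X (5.3)
print(round(percent_higher(8.0, 5.3)))  # 51
```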

Nvidia revealed only a few details about the internal structure at the presentation. It provided performance data for the Tensor cores only and remained silent on the traditional shader arithmetic units and other units.

The table lists throughput values with sparsity; for densely populated matrices, the teraflops figures are halved. The exception is FP64, for which Nvidia does not specify throughput with sparsity.

Accelerators for data centres and AI

| Name | GB200 | AMD MI300X | H100 | A100 |
| --- | --- | --- | --- | --- |
| Architecture | 1x Grace + 2x Blackwell | CDNA3 | Hopper | Ampere |
| Transistors / process / die size | 2x 104 billion / 4NP / ~800 mm² each | Various chiplets with 153 billion / N5 + N6 | 80 billion / 4N / 814 mm² | 54.2 billion / N7 / 826 mm² |
| Form factor | Add-on board (2 per 1U rack) / SXM | OAM | SXM5 | SXM4 |
| Year | 2024 | 2023 | 2022 | 2020 |
| TDP | 1200 watts per GPU + 300 watts Grace CPU | 750 watts | 700 watts | 400/500 watts |
| Memory | 192 GByte HBM3e | 192 GByte HBM3 | 80 GByte HBM3 | 80 GByte HBM2E |
| Transfer rate | 8 TByte/s | 5.3 TByte/s | 3.35 TByte/s | 2.04 TByte/s |
| GPU-to-GPU connection | NVLink Gen 5, 1.8 TByte/s | 896 GByte/s | NVLink Gen 4, 900 GByte/s | NVLink Gen 3, 600 GByte/s |
| **Tensor core computing power per GPU (teraflops, with sparsity)** | | | | |
| FP64 | 45 | 163 | 67 | 19.5 |
| FP32 | n.a. | 163 | n.a. | n.a. |
| TF32 | 2500 | 1307 | 989 | 312 |
| BF16 | 5000 | 2615 | 1979 | 624 |
| FP8 | 10000 | 5230 | 3958 | n.a. (INT8: 1248 TOPS) |
| FP4 | 20000 | n.a. | n.a. | n.a. |
| **Shader core computing power per GPU (teraflops)** | | | | |
| FP64 | n.a. | 81.7 | 33.5 | 9.7 |
| FP32 | n.a. | 163.4 | 66.9 | 19.5 |
| BF16 | n.a. | n.a. | 133.8 | 39 |
| FP16 | n.a. | n.a. | 133.8 | 78 |

FP4 and FP6

One of the new features of Blackwell is support for a 4-bit floating point format (FP4) in the chip's transformer engine. A research paper co-authored by an Nvidia employee describes the approach, which is intended to store weights and activations with only 4 bits in AI inferencing of Large Language Models (LLMs). The authors claim: "For the first time, our method can quantize both the weights and the activations in the LLaMA-13B model to only 4 bits and achieves an average score of 63.1 on the common-sense zero-shot reasoning tasks, which is only 5.8 points lower than the full-precision model and significantly outperforms the previous state of the art by 12.7 points." FP4 could therefore get by with significantly less data at only a relatively small loss in the accuracy of the result, which doubles not only the processing speed but also the possible model size.
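To give a feel for how coarse a 4-bit floating point grid is, the following Python sketch rounds values to the nearest FP4 number. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit), whose non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6, combined with a simple per-tensor scaling factor. Neither the article nor Nvidia's presentation confirms that Blackwell's transformer engine works this way, and the method in the cited paper is more elaborate; the function name `quantize_fp4` is hypothetical.

```python
# Non-negative magnitudes of an E2M1 4-bit float (assumed layout, see lead-in).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_fp4(values, scale=None):
    """Round each value to the nearest point on a scaled FP4 grid.

    If no scale is given, the largest magnitude in `values` is mapped
    onto 6.0, the largest E2M1 value. Returns (quantized_values, scale).
    """
    values = list(values)
    if scale is None:
        max_abs = max((abs(v) for v in values), default=0.0)
        scale = max_abs / FP4_E2M1_MAGNITUDES[-1] if max_abs > 0 else 1.0
    quantized = []
    for v in values:
        # Pick the grid magnitude closest to |v| / scale, then restore the sign.
        magnitude = min(FP4_E2M1_MAGNITUDES, key=lambda g: abs(abs(v) / scale - g))
        quantized.append(magnitude * scale if v >= 0 else -magnitude * scale)
    return quantized, scale


weights = [0.02, -0.45, 0.9, 1.2, -0.07]  # made-up example weights
quantized, scale = quantize_fp4(weights)
for original, approx in zip(weights, quantized):
    print(f"{original:+.2f} -> {approx:+.2f}")
```

With only eight magnitudes per sign available, small weights collapse to zero while the largest one is preserved, which is why the choice of scaling matters so much in practice.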


During the keynote, Nvidia CEO Huang revealed that FP6 is also an option. Although this format does not provide additional computing throughput compared to FP8, it saves memory, cache and register space and therefore also energy.
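The memory argument can be made concrete with a quick calculation. The Python sketch below estimates the raw weight storage of a model at different bit widths. It ignores scaling factors, activations and padding, so real footprints will be somewhat larger. The 13 billion parameters are borrowed from the LLaMA-13B model purely as an example, and the function name is illustrative.

```python
def weight_storage_gbyte(num_params: float, bits_per_param: int) -> float:
    """Raw weight storage in GByte (10**9 bytes), without metadata or padding."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 13e9  # example size only, not a Blackwell-specific figure

for name, bits in (("FP16", 16), ("FP8", 8), ("FP6", 6), ("FP4", 4)):
    print(f"{name}: {weight_storage_gbyte(PARAMS, bits):.2f} GByte")
```

In this simplified view, FP6 needs a quarter less space than FP8 (9.75 instead of 13 GByte), and FP4 halves it.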

For a GPT model with 1.8 trillion parameters ("1.8T Params") using a so-called Mixture of Experts, i.e. adapted computing and data accuracy, Nvidia claims that a GB200 is 30 times faster than an H100-based system and works 25 times more efficiently. Nvidia says it has calculated the performance down to one GPU, but in reality it compares a system with 8 HGX100 and a 400G InfiniBand interconnect against 18 GB200 superchips (NVL36).

But even with the established FP8 data format, Blackwell is on paper almost twice as fast as AMD's MI300X and more than 2.5 times as fast as its predecessor, the H100.
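These ratios can be checked against the FP8 tensor core values from the table (teraflops with sparsity). A short Python sketch; the dictionary and function names are illustrative:

```python
# FP8 tensor core throughput per GPU in teraflops, with sparsity (from the table).
FP8_TFLOPS_SPARSE = {"GB200": 10000, "MI300X": 5230, "H100": 3958}


def speedup(new_tflops: float, old_tflops: float) -> float:
    """Paper speedup of one accelerator over another."""
    return new_tflops / old_tflops


def dense_from_sparse(tflops_sparse: float) -> float:
    """Dense throughput is half the sparsity figure, as noted for the table."""
    return tflops_sparse / 2


blackwell = FP8_TFLOPS_SPARSE["GB200"]
print(f"vs. MI300X: {speedup(blackwell, FP8_TFLOPS_SPARSE['MI300X']):.2f}x")  # 1.91x
print(f"vs. H100:   {speedup(blackwell, FP8_TFLOPS_SPARSE['H100']):.2f}x")    # 2.53x

# Halving both sides for dense matrices leaves the ratios unchanged.
print(dense_from_sparse(blackwell))  # 5000.0
```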