AMD Instinct MI: new products yearly, MI325X this year vs. Nvidia's Hopper

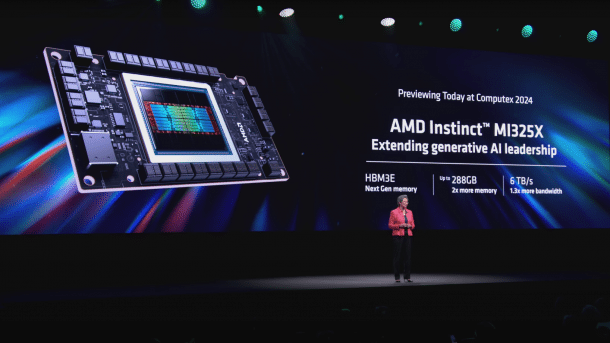

The predecessor has barely reached the market, and the upgrade is already at the starting line: AMD's Instinct MI325X brings more and faster memory for huge AI models in data centers.

(Image: AMD)

It cannot be said often enough: the market for AI models is developing rapidly. That is why new accelerators for AI training and inference, or upgrades to existing ones, are appearing everywhere.

AMD is taking the logical step with its AI accelerator for data centers, the Instinct MI325X. The MI300 generation, which was presented only in December 2023, i.e., six months ago, now gets much more memory capacity and a slightly higher memory transfer rate thanks to the move to more modern HBM3e stacked memory. The MI325X fits into the same boards and rack slots as its predecessor, as AMD CEO Dr. Lisa Su promised in her Computex keynote. A group of eight accelerators should be able to run an AI model with a trillion parameters.

Compared to the MI300X, which AMD is currently selling like hotcakes, the MI325X offers 50 percent more memory capacity, up to a maximum of 288 GByte. The jump in transfer rate from an already very high 5.3 to 6 TByte per second, around 13 percent, is much smaller. The computing power remains unchanged, with a peak clock rate of 2.1 GHz.
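
The arithmetic behind these figures, and behind the trillion-parameter claim for a group of eight accelerators, can be checked roughly. The following is a minimal sketch, not AMD's own calculation: it assumes decimal units, 16-bit weights (2 bytes per parameter), and counts the model weights only, ignoring activations and caches. The helper names are made up for illustration.

```python
# Figures quoted in the article; decimal units (1 GByte = 1e9 bytes) are assumed.
MI325X_CAPACITY_GBYTE = 288
MI325X_BANDWIDTH_TBYTE_S = 6.0
MI300X_BANDWIDTH_TBYTE_S = 5.3


def percent_gain(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


def weights_size_gbyte(parameters: float, bytes_per_parameter: float) -> float:
    """Memory needed for the model weights alone, in GByte."""
    return parameters * bytes_per_parameter / 1e9


# Predecessor capacity implied by "50 percent more, up to 288 GByte".
mi300x_capacity_gbyte = MI325X_CAPACITY_GBYTE / 1.5

bandwidth_gain = percent_gain(MI325X_BANDWIDTH_TBYTE_S, MI300X_BANDWIDTH_TBYTE_S)

# Assumption: 16-bit weights, weights only (no activations, no KV cache).
group_capacity_gbyte = 8 * MI325X_CAPACITY_GBYTE
trillion_weights_gbyte = weights_size_gbyte(1e12, 2)

print(f"Implied MI300X capacity: {mi300x_capacity_gbyte:.0f} GByte")
print(f"Transfer rate gain: {bandwidth_gain:.1f} percent")
print(f"Eight MI325X: {group_capacity_gbyte} GByte, "
      f"weights of a 1T model: {trillion_weights_gbyte:.0f} GByte")
print("Weights fit:", trillion_weights_gbyte <= group_capacity_gbyte)
```

Under these assumptions the weights alone take about 2,000 of the 2,304 GByte available, so the claim is plausible but leaves little headroom at 16-bit precision.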

Measured against Nvidia's currently market-dominating but somewhat older Hopper chips, the gain is considerably larger. As the H200, these are currently only available with 141 GByte of HBM3e memory and a transfer rate of 4.8 TByte per second. The original H100 used HBM3, which is slower and came in smaller capacity. The MI325X therefore has an advantage of around a factor of 2 in memory capacity and around 25 percent in transfer rate, once it can actually be bought.

The MI325X should be available from the fourth quarter of 2024, just under a year after the official launch of the MI300X and in a similar timeframe to Nvidia's Blackwell generation, which is also significantly faster than Hopper. So far, 192 GByte of HBM3e with 8 TByte per second have been announced for Blackwell.
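
The memory figures quoted so far can be put side by side. The sketch below uses only the numbers from the article and takes the H200 as the baseline; the `Accelerator` class and the `ratios` helper are hypothetical names chosen for this illustration, and the Blackwell values are the announced ones, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    capacity_gbyte: float
    bandwidth_tbyte_s: float


# Values as quoted in the article.
H200 = Accelerator("Nvidia H200", 141, 4.8)
MI325X = Accelerator("AMD Instinct MI325X", 288, 6.0)
BLACKWELL = Accelerator("Nvidia Blackwell (as announced)", 192, 8.0)


def ratios(chip: Accelerator, baseline: Accelerator) -> tuple[float, float]:
    """Return (capacity ratio, transfer rate ratio) of chip vs. baseline."""
    return (
        chip.capacity_gbyte / baseline.capacity_gbyte,
        chip.bandwidth_tbyte_s / baseline.bandwidth_tbyte_s,
    )


for chip in (MI325X, BLACKWELL):
    capacity_ratio, bandwidth_ratio = ratios(chip, H200)
    print(f"{chip.name}: {capacity_ratio:.2f}x capacity, "
          f"{bandwidth_ratio:.2f}x transfer rate vs. {H200.name}")
```

On these numbers the MI325X leads on capacity, while the announced Blackwell figures lead on transfer rate.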

Annual AI cadence

In line with the rapid development of AI models, AMD intends to launch new products in the Instinct series every year from now on. The MI350, based on the new CDNA4 architecture, will be launched in 2025. Like Nvidia's Blackwell generation, it will support the AI data types FP4 and FP6. These are floating-point formats with low precision and a small range of values, but they are sufficient for some tasks with AI models adapted to them. FP4, at least, can be processed twice as fast as previous 8-bit data types; FP6 can at least help to save memory space and transfer rate in caches and registers.
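
How small the value range of a 4-bit floating-point format is can be shown by listing every value it can encode. The article does not say which bit layout AMD uses; the sketch below assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1) from the OCP Microscaling specification. The function names are made up for illustration, and the footprint figures cover the model weights only.

```python
def decode_e2m1(code: int) -> float:
    """Decode a 4-bit code laid out as sign(1) | exponent(2) | mantissa(1)."""
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    if exponent == 0:
        # Subnormal: no implicit leading one, effective exponent 1 - bias = 0.
        return sign * mantissa * 0.5
    # Normal: implicit leading one, exponent bias of 1 (assumed layout).
    return sign * (1.0 + mantissa * 0.5) * 2.0 ** (exponent - 1)


# Codes 0..7 have the sign bit clear, so these are all non-negative values.
positive_values = sorted(decode_e2m1(code) for code in range(8))
print(positive_values)


def weights_gbyte(parameters: float, bits_per_parameter: int) -> float:
    """Memory for the weights alone, in GByte (1 GByte = 1e9 bytes)."""
    return parameters * bits_per_parameter / 8 / 1e9


# Footprint of a trillion-parameter model at 8, 6 and 4 bits per weight.
footprint = {bits: weights_gbyte(1e12, bits) for bits in (8, 6, 4)}
print(footprint)
```

With only eight distinct magnitudes, models have to be adapted (for example with scaling factors per block of values) before such a format is usable, which matches the article's caveat.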

With these number-crunching tricks, AMD aims to be up to 35 times (sic!) as fast as the previous-generation MI300X in tailored AI applications. Nvidia announced similar math tricks when it presented the Blackwell series, claiming a factor of 30 for a 1.8 trillion parameter model using Mixture of Experts, i.e., a model that activates only some of its specialized sub-networks for each input.

The MI350 will use 3-nanometer technology. AMD did not directly say which manufacturer will produce it, but emphasized in the subsequent Q&A session how satisfied it was with the cooperation with the Taiwanese chip manufacturer TSMC. Also on board: a maximum of 288 GByte of HBM3e stacked memory, as with the MI325X.

The MI400 series, which uses the ominously named "CDNA next" architecture, is then planned for 2026. So the guesswork enters a new round: what will come after CDNA4?

(csp)