Reliability: Economists lament market failure in generative AI

ZEW economists call for an EU program to fund the development of safe generative AI such as chatbots. At present, OpenAI & Co. are passing the social risks on to society.

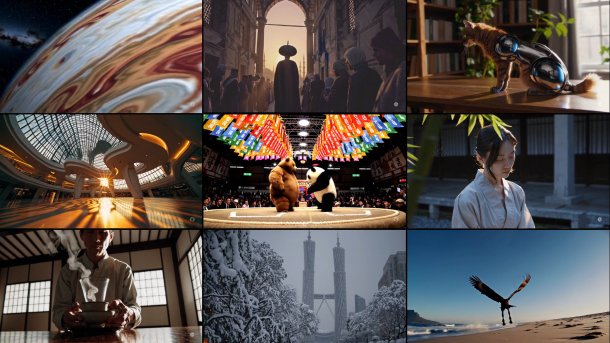

Homepage of OpenAI's video AI Sora.

(Image: Screenshot)

Politicians and experts everywhere are calling for the development of "trustworthy" artificial intelligence. However, according to researchers at the Leibniz Centre for European Economic Research (ZEW), appeals and regulations are not enough to steer the currently very popular chatbots and image generators from OpenAI, Google & Co. in that direction.

"We are observing a market failure in generative AI," criticizes Dominik Rehse, head of a ZEW research group for the design of digital markets. Developers can currently "generate considerable income through more powerful models". However, they do not bear the costs of the social risks these models create.

To correct this imbalance, the ZEW economists propose a new EU funding program in a policy brief. It is meant to explicitly create incentives for the development of safe generative AI.

"The safety of these systems is a socially desirable innovation that individual developer companies have not yet provided sufficiently," says Rehse, explaining the initiative. A similar problem exists in the production of some vaccines, for example, "where such funding programs have already been successfully applied".

The public should be able to test AI algorithms

The team's idea: Interested developers take part in a competition announced and funded by the EU. Systems that meet certain safety and other performance criteria are to be developed in stages. Developers who reach a milestone receive a predetermined sum of money.

According to the researchers, this reduces demand uncertainty on the company side: The industry would know from the outset that the innovation would be worth the effort. At the same time, the EU would not prescribe specific technologies, but would remain technology-neutral and set few technical requirements.

According to the group, however, "carefully defined safety and other performance indicators" are necessary. Their robustness is expected to come primarily from reproducible tests, in which the public should also be able to probe AI algorithms for misbehavior or particularly good performance.

With such incentive mechanisms, the EU has the opportunity to "develop innovations in a more targeted manner than before", emphasizes co-author Sebastian Valet. "It could also occupy a niche in the market for generative AI, in which it has only played a minor role to date."

(olb)