Project Astra: Google's faster AI assistant has eyes, ears and memory

Combined with Gemini 2.0, Project Astra enables a universal, multimodal AI assistant for smartphones and smartglasses, but so far only on a trial basis.

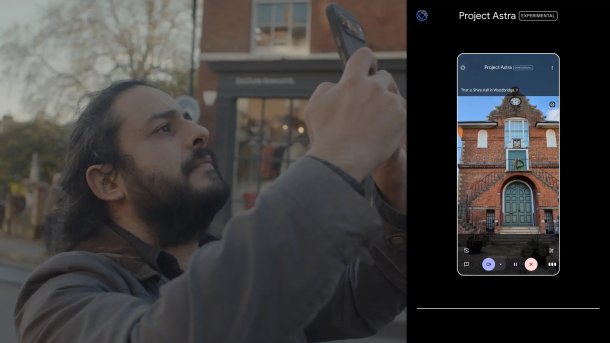

Project Astra for object recognition via smartphone

(Image: Google)

Alongside its new Gemini 2.0 AI model, Google has also announced progress on "Project Astra". The project aims to build a universal, multimodal AI assistant for smartphones and smartglasses that can help users both visually and verbally. The assistant can now also memorize various things, so users can rely on it as a memory aid. However, Project Astra is currently only available to a limited group of testers.

The data company first presented Project Astra at Google I/O in May 2024, an event dominated by video AI, search AI and even more AI. Project Astra bundles Google's work on AI assistants, also referred to as agents, which act on behalf of users. These agents are meant to understand the world, remember things and take actions so that they are genuinely useful. Google had promised that the Gemini app would gain some of these capabilities before the end of the year, and it has only just managed to deliver, and so far only for some testers.

Google itself still describes Project Astra as a "research prototype to explore the future capabilities of a universal AI assistant". Since Google I/O, the company has continued to improve and expand the assistant together with selected testers. It can now understand several languages and respond in them, including different accents and uncommon words.

Longer memory and use of Google services

The assistant can now also remember things for longer. According to Google, Project Astra has up to 10 minutes of memory within a session, compared with just 45 seconds at Google I/O. The assistant is also meant to remember more past conversations, making it more personal to use. Lower latency should help as well, allowing the assistant to understand spoken questions and instructions about as quickly as a human would.

Project Astra is based on Google's new Gemini 2.0 AI model and can use various Google services such as Search, Maps and Lens. Users can point their smartphone camera at an object and ask the assistant for background information about it. Astra can apparently also suggest places, for example when the user asks about bakeries on the way into the city center.

AI assistant with limits

However, the AI assistant still has its limits. According to Axios, Project Astra cannot access emails or photos stored on the user's smartphone, and it struggles to tell different voices apart in a noisy environment. Even simple tasks such as setting an alarm or a timer are not possible, although the existing Google Assistant has been able to do this for a long time.

Google says it is still working on Project Astra and plans to integrate these functions into the Gemini app in the future and to bring them to other form factors such as smartglasses. The group of testers is being expanded, and interested parties can sign up to a waiting list to try out the assistant. However, this is limited to the USA and the UK, as Project Astra is only available there so far. An Android smartphone is also required; the assistant is currently not compatible with iPhones.

(fds)