Microsoft buys the most Nvidia accelerators

Almost half a million Hopper GPUs went to Microsoft in 2024. Chinese companies are also at the forefront.

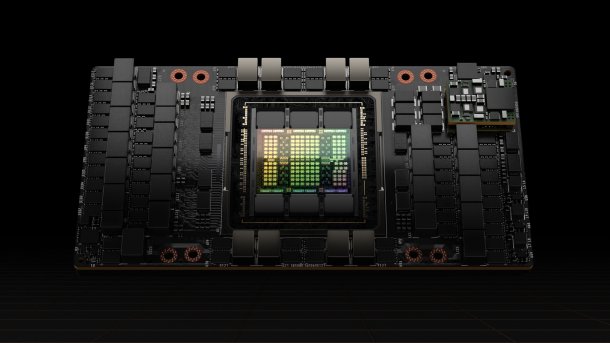

Nvidia's AI accelerator H100.

(Image: Nvidia)

Microsoft is by far Nvidia's largest customer for AI accelerators. According to an estimate by market research firm Omdia, the company purchased 485,000 Hopper GPUs, i.e. H100 and H200 models, in 2024. It is unclear how many of the chips Microsoft uses itself and how many are intended for its partner OpenAI. The Omdia figures were published by the Financial Times.

Assuming a price of 30,000 US dollars per GPU, that would amount to almost 15 billion US dollars. Nvidia's annual revenue from server products is expected to exceed 100 billion US dollars this year, but this figure also includes ARM processors, network cards and interconnect technology.
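The estimate above can be checked with a quick calculation. The following is only a sketch: the unit count is Omdia's estimate, the price of 30,000 US dollars is the flat assumption made in the text rather than an actual purchase price, and the function name `estimated_spend` is purely illustrative.

```python
# Back-of-the-envelope check of the spending estimate.
HOPPER_GPUS = 485_000       # Omdia estimate for Microsoft in 2024
ASSUMED_PRICE_USD = 30_000  # assumed flat price per GPU, not a confirmed figure


def estimated_spend(units: int, unit_price_usd: int) -> int:
    """Return the total spend in US dollars for a unit count and a flat price."""
    return units * unit_price_usd


total_usd = estimated_spend(HOPPER_GPUS, ASSUMED_PRICE_USD)
print(f"{total_usd / 1e9:.2f} billion US dollars")  # prints "14.55 billion US dollars"
```

The result of 14.55 billion US dollars scales linearly with the assumed price, so any volume discount would lower it proportionally.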

Other companies lag behind

The Chinese companies Bytedance and Tencent follow well behind in second and third place among the largest Hopper buyers, with around 230,000 Hopper GPUs each. Due to US export restrictions, they buy the slimmed-down versions H800 and H20. The H800 initially came with a slower Nvlink interconnect; after the export restrictions were updated, Nvidia added the H20 with half the computing power.

Bytedance uses its AI data centers for the AI algorithms in Tiktok, among other things. Tencent owns numerous Chinese and international companies and operates WeChat in China, which integrates AI agents.

Meta is said to have purchased 224,000 Hopper GPUs as well as 173,000 Instinct MI300s, which apparently makes it AMD's largest customer. Microsoft is estimated to have purchased 96,000 Instinct MI300s. xAI, Amazon and Google round off the list of the largest Nvidia Hopper customers, with 150,000 to 200,000 units each.

Home-grown in the millions

Amazon, Google and Meta are the furthest along in using their own AI accelerators. Google and Meta are each said to have put around 1.5 million of their own chips into operation; Google calls its chips Tensor Processing Units (TPU), while Meta calls its chips Meta Training and Inference Accelerator (MTIA). Amazon has 1.3 million Trainium and Inferentia chips. Microsoft is lagging behind with 200,000 of its own Maia accelerators.

The in-house designs are all slower per chip than Nvidia's Hopper GPUs. Nvidia is currently ramping up production of the new Blackwell generation, which is significantly faster but also more expensive.

(mma)