DeepSeek: V3 development is said to have been much more expensive

The development of DeepSeek-V3 was probably much more expensive than suggested. The company is said to have access to 60,000 GPUs.

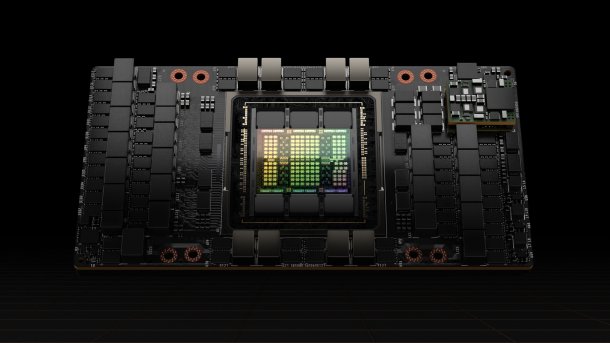

Although the US prohibits the sale of the H100 accelerator pictured here to China, DeepSeek is said to have obtained 10,000 units through imports.

(Image: Nvidia)

DeepSeek is said to have access to tens of thousands of GPU accelerators for developing its AI models, including H100 GPUs, which fall under the US export bans. The reported cost of just under 5.6 million US dollars for DeepSeek-V3 probably represents only a small part of the total bill.

In the paper on the V3 model, DeepSeek describes a comparatively small data center with 2048 Nvidia H800 accelerators. The company assumes a hypothetical rental price of 2 US dollars per H800 GPU hour. With a total of just under 2.8 million GPU hours (spread across 2048 GPUs), this comes to 5.6 million US dollars.
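The arithmetic behind that figure can be checked in a few lines. This is a minimal sketch that uses the rounded numbers quoted here (about 2.8 million GPU hours, 2 US dollars per GPU hour, 2048 GPUs). The function and variable names are illustrative, and the wall-clock estimate assumes that all GPUs ran in parallel for the entire training run.

```python
# Rounded figures as quoted in the article; all names are illustrative.
GPU_HOURS = 2_800_000          # "just under 2.8 million" H800 GPU hours
RATE_USD_PER_GPU_HOUR = 2.0    # hypothetical rental price assumed by DeepSeek
NUM_GPUS = 2048                # size of the cluster described in the paper


def training_cost_usd(gpu_hours, rate_usd_per_gpu_hour):
    """Rental cost of a run: GPU hours times the hourly price per GPU."""
    return gpu_hours * rate_usd_per_gpu_hour


def wall_clock_days(gpu_hours, num_gpus):
    """Elapsed days, assuming every GPU is busy for the whole run."""
    return gpu_hours / num_gpus / 24


cost = training_cost_usd(GPU_HOURS, RATE_USD_PER_GPU_HOUR)
days = wall_clock_days(GPU_HOURS, NUM_GPUS)

print(f"Rental cost: {cost / 1e6:.1f} million US dollars")
print(f"Wall-clock time: about {days:.0f} days")
```

With these rounded inputs, the run would occupy the 2048 GPUs for a little under two months, which shows how narrowly the quoted figure is scoped: it covers a single training run, not the hardware itself.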

However, the developers themselves cite a caveat: "Please note that the above costs only include the official training of DeepSeek-V3 and not the costs associated with previous research and ablation experiments on architectures, algorithms or data."

50,000 Hopper and 10,000 Ampere accelerators

Semianalysis has attempted a more realistic cost breakdown. According to the analysts, DeepSeek has access to about 60,000 Nvidia accelerators through its parent company High-Flyer: 10,000 A100s from the Ampere generation, acquired before the US export restrictions came into effect, 10,000 H100s from the gray market, 10,000 H800s customized for China, and 30,000 H20s, which Nvidia launched after more recent export restrictions.

Scale AI CEO Alexandr Wang recently said in a CNBC interview that DeepSeek uses 50,000 H100 accelerators (from minute 2:40). This could be a misunderstanding: the H100, H800 and H20 (together supposedly 50,000) all belong to the Hopper generation, but they are different variants.
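How the two figures could be reconciled becomes clearer when the Semianalysis numbers are grouped by GPU generation. The following is a small sketch using only the counts reported above; the data structure and function name are made up for illustration.

```python
# GPU counts as attributed to Semianalysis in the article.
# The dictionary layout and the function name are illustrative only.
fleet = {
    "A100": {"generation": "Ampere", "count": 10_000},
    "H100": {"generation": "Hopper", "count": 10_000},
    "H800": {"generation": "Hopper", "count": 10_000},
    "H20": {"generation": "Hopper", "count": 30_000},
}


def count_by_generation(fleet):
    """Sum the accelerator counts per GPU generation."""
    totals = {}
    for info in fleet.values():
        generation = info["generation"]
        totals[generation] = totals.get(generation, 0) + info["count"]
    return totals


totals = count_by_generation(fleet)
total_gpus = sum(totals.values())

print(totals)
print(f"Total accelerators: {total_gpus}")
```

Only 10,000 of the 50,000 Hopper GPUs in this breakdown are actual H100s, which would be consistent with the "50,000 H100" figure being shorthand for the whole Hopper fleet.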

The H100 is the standard model for Western markets. For the H800, Nvidia throttled the NVLink interconnect used for communication between multiple GPUs because of export restrictions. The H20 followed after new restrictions, with greatly reduced computing power but unthrottled NVLink. It also uses the maximum memory configuration with 96 GByte of High-Bandwidth Memory (HBM3) and a transfer rate of 4 TByte/s.

High development costs left out

Semianalysis calculates that the servers required for the 60,000 GPUs cost around 1.6 billion US dollars. Operating costs come on top of that, as do the salaries of the development teams.
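To put the two sums in proportion, the following sketch divides the reported server cost by the number of GPUs and compares it with the quoted training cost. It is only a rough order-of-magnitude comparison: the first figure is capital expenditure for the whole fleet, the second is a hypothetical rental cost for a single run. The variable names are illustrative.

```python
# Figures as reported in the article; names are illustrative.
SERVER_CAPEX_USD = 1.6e9          # servers for the whole fleet (Semianalysis)
NUM_GPUS = 60_000                 # accelerators attributed to DeepSeek
QUOTED_TRAINING_COST_USD = 5.6e6  # rental cost of the final V3 training run

# Server cost per GPU. This includes each GPU's share of the surrounding
# server hardware, so it is not the price of the GPU alone.
capex_per_gpu = SERVER_CAPEX_USD / NUM_GPUS

# How many times larger the hardware investment is than the quoted run cost.
ratio = SERVER_CAPEX_USD / QUOTED_TRAINING_COST_USD

print(f"Server cost per GPU: about {capex_per_gpu:,.0f} US dollars")
print(f"Hardware investment vs. quoted training cost: about {ratio:.0f}x")
```

Under these reported numbers, the hardware investment alone would be several hundred times the quoted training cost.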

According to DeepSeek, 96 percent of the quoted 5.6 million US dollars went to pre-training, that is, the training of the final base model. The paper leaves out the preceding development effort, including all the innovations that were already incorporated into DeepSeek-V2.

The development of the Multi-Head Latent Attention (MLA) caching technique alone is said to have taken months. MLA compresses the key-value cache that the model keeps for the tokens it has already processed, so that it can access this data quickly when generating further output without taking up a lot of memory.

A second innovation is also likely to have consumed considerable resources: "DualPipe". DeepSeek uses some of the streaming multiprocessors (SMs) in Nvidia's GPUs as a kind of virtual data processing unit (DPU), as The Next Platform points out. These SMs handle data movement within and between the AI accelerators, independently of the host processor and with much lower latency than when CPUs are involved, which increases efficiency.

In the paper on the more powerful R1 model, DeepSeek gives no information on the hardware used. The use of a small data center would be even less plausible here. Following a post on X, reports have recently been increasing that DeepSeek may also be using AI accelerators from Huawei for R1.

(mma)