Nvidia boss comments on DeepSeek sell-off for the first time

Investors have misinterpreted DeepSeek's achievement, says Nvidia boss Jensen Huang. Strong hardware is still required for post-training.

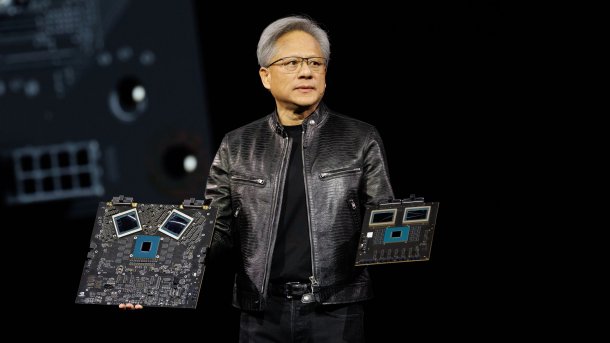

(Image: Nvidia)

Nvidia CEO Jensen Huang has commented for the first time on the temporary share sell-off that followed the release of DeepSeek's new AI models. In January 2025, headlines circulated claiming that new training methods could significantly reduce the need for AI accelerators. As a result, Nvidia's market capitalization fell by over 600 billion US dollars, though the company has since returned to growth.

In a video interview, Huang says (from the 58:00 mark) that investors misjudged the implications: "From an investor's perspective, there was a mental model that the world consisted of pre-training and then inference. And inference meant that you ask an AI a question and it immediately gives you an answer. I don't know whose fault that is, but obviously that paradigm is wrong."

Post-training most important according to Huang

Pre-training is the training of a base model that can answer basic questions. In DeepSeek's case, this is the DeepSeek-V3 model. "But the most important part of intelligence, where you learn to solve problems," according to Huang, is post-training, which covers the methods used to make an AI model more capable.

Various forms of reinforcement learning are currently in widespread use: the model being trained is presented with different candidate answers and learns from external feedback which of them are best. In DeepSeek's case, two AI models passed questions and answers back and forth, resulting in the DeepSeek-R1 reasoning model.

DeepSeek has only disclosed the hardware used for the V3 model, and there are indications that much more powerful servers were used behind the scenes. The developers have said nothing about the accelerators used to train R1, which supports Huang's argument.

(mma)