DeepMind's AI "Genie 2" creates complex interactive 3D worlds from a single image

Google DeepMind has presented "Genie 2", which generates interactive 3D environments from individual images. It is intended to be used for training AI agents.

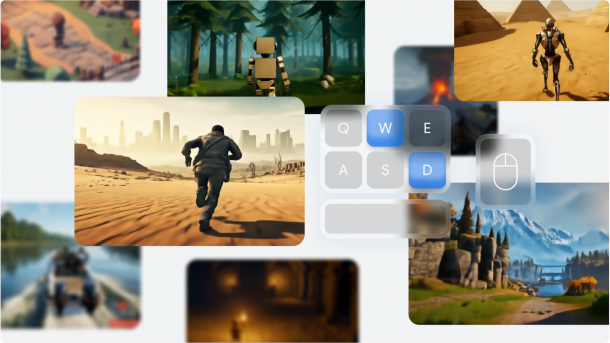

Examples of 3D worlds from DeepMind's "Genie 2"

(Image: Google DeepMind)

DeepMind has unveiled "Genie 2", the next generation of its artificial intelligence for creating game worlds. This so-called "foundation world model" is said to be able to generate a wide variety of complex, three-dimensional environments from a single image, in which AI agents can then be trained and tested interactively.

The 3D worlds generated by Genie 2 can be explored by both humans and AI agents using a keyboard and mouse. The system demonstrates various advanced capabilities in the demo videos presented on the project page: It models physical effects such as gravity, smoke and water reflections, maintains the consistency of the environment and can even simulate the behavior of computer-controlled characters (NPCs).

Technically, Genie 2 is an autoregressive latent diffusion model trained on a large video dataset, explains the research team led by Jack Parker-Holder. The system can keep the generated worlds consistent for up to one minute, although most examples on the website last 10 to 20 seconds.


From 2D to complex 3D worlds

The progress over its predecessor is significant. The first "Genie", presented in March, was limited to 2D platform games in the style of Super Mario Bros. That model was trained exclusively on video footage (30,000 hours from hundreds of games) and without labeled input actions. However, it still ran very slowly, at only one frame per second.

According to DeepMind, an unoptimized version of Genie 2 already runs in real time, albeit at reduced quality. This is reminiscent of earlier experiments such as Google's GameNGen, which was able to simulate the shooter "Doom" without a game engine, although that system was limited to a single game.

Tool for AI training

One of the main goals of Genie 2 is the training of AI agents. DeepMind demonstrates this with its SIMA agent (Scalable Instructable Multiworld Agent), which can execute instructions in the generated environments.

With Genie 2, the research team hopes to solve a structural problem in the training of intelligent virtual agents (embodied agents) and achieve the breadth and generality required for progress towards Artificial General Intelligence (AGI).

Until then, however, DeepMind still has a few hurdles to overcome: the quality of the output is said to fluctuate considerably at times, and the consistency of the virtual environments must be improved further for longer interactions.

(vza)