Image generator from GPT-4o: what is probably behind the technical breakthrough

The image generator from GPT-4o impresses with its quality and precise text integration. But what makes it different from other models? An attempt to explain.

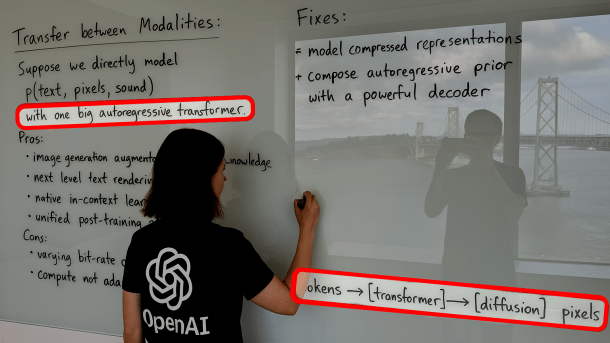

"Easter Egg": OpenAI gives more or less subtle hints on the website about how image generation with GPT-4o works.

(Image: OpenAI / Hervorhebung heise online)

GPT-4o's AI image generator produces amazingly high-quality, consistent images that even contain (mostly) correct and legible text – - an ability that previous AI models often failed to achieve. Numerous examples, which have been circulating all over the Internet for days, impressively demonstrate the leap in quality. GPT-4o is particularly strong at analyzing uploaded reference images and transferring them into a different style or integrating them into images. But what does OpenAI do differently with GPT-4o than, say, Midjourney or Dall-E to achieve this?

(Image: Foto, Verfremdungen erzeugt mit GPT-4o durch heise online)

In the following, we try to derive plausible hypotheses about how the GPT-4o image generator works from the sparse information provided by OpenAI so far, comparisons with other models and our observations. It should be emphasized that this is an analysis based on assumptions and observations.

Similar capabilities were demonstrated by LlamaGen, for example, and before that by Google's “Pathways Autoregressive Text-to-Image Model” (Parti) from 2022 –, albeit at a qualitatively lower level of quality. Parti was designed to “generate high-quality photorealistic images and depict complex, content-rich scenes that also incorporate extensive world knowledge”.

The description of the image generation capabilities on the OpenAI website reads strikingly similar: “Native image generation allows GPT-4o to seamlessly link its knowledge between text and image. The result is a model that works smarter and more efficiently.”

And indeed, according to OpenAI, GPT-4o also uses an “autoregressive model” (AR model) and thus works fundamentally differently from Midjourney or Dall-E, where a diffusion model generates the images.

However, OpenAI appears to have developed the approach significantly further compared to LlamaGen or Parti and optimized several key capabilities. For example, GPT-4o promises the precise placement of up to 20 objects in a single image, including detailed control over their properties and relationships. This is considerably more than previous image generators, which typically achieve a maximum of half of this.

If you observe GPT-4o during image generation, you will recognize two phases: First, a coarse image structure is quickly created from noise – Midjourney and others also start similarly. In the second phase, however, the final image is built up precisely line by line from top to bottom (“adding details”), whereby, unlike in other models, each pixel generated in this step is already calculated and placed.

Autoregressive image generation

But how does the autoregressive approach differ from diffusion models in image generation? Diffusion models are based on a process of step-by-step denoising, starting with a completely noisy image. Iterative denoising steps described by stochastic differential equations are applied to approximate the original data distribution. During training with such series generated from countless original images, the diffusion model successively learns to generate realistic motifs in all possible styles from pure noise.

AR models, on the other hand, generate images by dividing them into many small image sections, so-called tokens. Anyone who has ever worked with language models will be familiar with these tokens as word components. In this case, however, each token represents a small area of the entire image, for example a block of 8 × 8 or 16 × 16 pixels.

A crucial question here is: What exactly are these “tokens” in the case of images? Unlike words in a sentence, images are continuous grids of pixels – so there is no obvious discrete vocabulary. GPT-4o presumably solves this problem by using a special image tokenizer, often a vector quantized autoencoder (VQ-VAE). This compresses the image into a sequence of discrete codes. However, Zhiyuan Yan et al speculate that OpenAI may use continuous tokens instead of discrete tokens. They base their assumption on the fact that, according to previous experience, VQ-VAE could impair the ability to semantically capture image content and display it precisely, but that GPT-4o has taken a big step forward in this field compared to other models.

Unlike vector quantization, which uses discrete codebooks to represent image content, continuous tokens enable a finer and more accurate representation of data as they are not restricted to a limited number of discrete states.

Regardless of whether discrete or continuous, tokenization creates the foundation for image generation by abstracting motifs of all kinds into a higher, semantic representation. Instead of generating raw pixels, which would be slow and inefficient, the model generates these token indices. A separate decoder network then translates the generated token sequence back into a coherent image.

Stepwise generation as conditional probabilities

An autoregressive algorithm generates an image as a product of conditional probabilities of tokens. Thus, if we consider an image as a sequence of tokens z1, z2, ..., zN, an AR model models the joint probability P(z1, z2, ..., zN) as the product of conditional probabilities using the chain rule:

P(z1, z2, ..., zN) = P(z1) × P(z2|z1) × P(z3|z1, z2) × ... × P(zN|z1, z2, ..., zN-1)

In other words, the model learns the probability of predicting each token based on all previously generated tokens. The process typically starts with a text description, from which the first matching image token is predicted. Further tokens are then determined based on all previous tokens until the entire image is finally assembled.

During training, GPT-4o receives numerous sequences of image tokens, often together with text descriptions or other conditioning data. In doing so, the model effectively learns to predict the next token in a sequence.

Tokenization has several advantages: it drastically reduces the sequence length compared to pixel-by-pixel generation, filters out high-frequency pixel noise and allows the model to work with more complex and abstract concepts, such as “a patch of blue sky” or “a fragment of a horse's mane”. A much more detailed explanation can be found in the Deep Dive by Joseph V. Thomas on Medium.

Videos by heise

Does GPT-4o use a pure AR model for image generation?

Even though Thomas, like OpenAI, speaks exclusively of autoregressive generation in the context of GPT-4o's image generation, Yan et al. speculate that the architecture could have a “diffusion head”. Here, the AR model generates a sequence of visual tokens that serve as input to the diffusion model, which decodes the final image.

They argue that outstanding image quality, texture diversity and the ability to create natural scenes are typical characteristics of diffusion models. They also cite an “Easter egg” that can be found on the OpenAI website on image generation capabilities. There you can see a panel in the background of an image that speaks of “one big autoregressive transformer”, but shows the process “Token ⇾ [Transformer] ⇾ [Diffusion] Pixel” at the bottom right (see cover image of this article at the top).

To support their thesis, they trained their own classification model to distinguish between images originating from AR and diffusion models. This model consistently classified the images generated by GPT-4o as diffusion-based. However, it is also conceivable that OpenAI was able to transfer these typical positive characteristics of diffusion models to AR technology.

Yan et al. developed the benchmark GPT-ImgEval for their investigations of GPT-4o, in which GPT-4o outperformed all “classic” image generators such as Stable Diffusion (1.5, 2.1, XL, 3) and Dall-E 2 as well as the LLM-supported AR models, such as LlamaGen, Janus(flow) and GoT.

Not free of weaknesses

As impressive as the GPT-4o images are, the model is not without its weaknesses. For example, it tends to oversharpen images. Using the brush tool for local image editing sometimes results in global changes, as the entire image is regenerated and not just the part to be edited. Overall, GPT-4o tends to have warm color tones, and in the case of motifs with several people, GPT-4o also makes typical mistakes such as awkward poses and unrealistic object overlaps. Fonts with characters outside the Latin writing system are often displayed incorrectly in the images.

Beispiele: Bilderzeugung mit GPT-4o (7 Bilder)

Erzeugt mit GPT-4o durch heise online

)(vza)