Google: Private AI Compute to Combine Cloud AI with Data Protection

With Private AI Compute, Google makes a far-reaching privacy promise: powerful cloud AI that can be used without exposing user data.

(Image: heise medien)

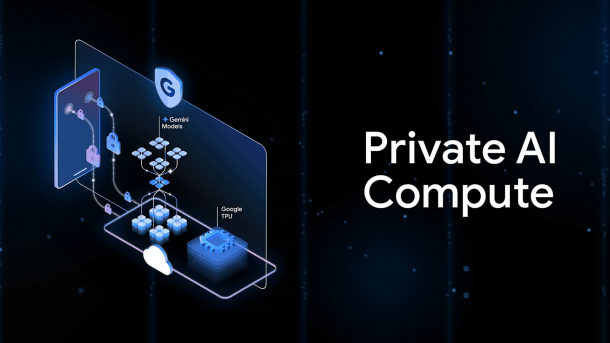

Google has introduced Private AI Compute, a new cloud-based AI processing platform that uses larger Gemini models while aiming to protect user privacy. The system combines the computing power of cloud servers with security guarantees previously associated only with local on-device processing.

Background: On-device AI models like Gemini Nano run on smartphones' Neural Processing Units (NPUs) and can therefore process user data locally, but they reach their limits with more complex tasks. More powerful cloud models, in turn, require user data to be transferred to Google's servers, a privacy problem that Private AI Compute aims to solve.

The concept is reminiscent of Private Cloud Compute, which Apple announced in June 2024. Apple also relies on a combination of local and cloud-based AI processing, in which sensitive data is meant to be processed in a protected cloud environment.

Titanium Intelligence Enclaves as Security Anchor

Technically, Private AI Compute is based on a single integrated Google stack with its Tensor Processing Units (TPUs). The core of the security architecture consists of Titanium Intelligence Enclaves (TIE) – hardware-based secure enclaves designed to isolate user data. According to Google, remote attestation and end-to-end encryption are used to connect devices to the sealed cloud environment.

The system is intended to ensure that sensitive data is processed exclusively within the protected enclave and is inaccessible to Google or third parties. The architecture is based on Google's Secure AI Framework and the company's own AI Principles and Privacy Principles. Google emphasizes that Private AI Compute can handle the same kind of sensitive information that users would otherwise expect to be processed locally on the device.

The multi-layer approach comprises several security layers: data remains encrypted in transit between the device and the cloud. Within the Titanium enclaves, Gemini models process the data in an isolated environment. Remote attestation allows the device to verify that it is in fact communicating with a trusted Private AI Compute instance before it sends anything.
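To illustrate the idea behind remote attestation, here is a minimal sketch of a device that releases data only after checking an attestation report. It is not Google's protocol: the names `AttestationReport`, `TRUSTED_MEASUREMENTS`, `sign_report`, `verify_report`, and `release_data` are hypothetical, and an HMAC with a shared key stands in for the hardware-rooted signature a real enclave would produce.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

# Hypothetical allow-list: digests of server builds the device is willing to trust.
TRUSTED_MEASUREMENTS = {hashlib.sha256(b"private-ai-compute-build-1").hexdigest()}


@dataclass(frozen=True)
class AttestationReport:
    measurement: str  # hex digest of the software the enclave claims to run
    nonce: bytes      # fresh value chosen by the device to prevent replay
    signature: bytes  # stand-in for a hardware-rooted signature


def sign_report(key: bytes, measurement: str, nonce: bytes) -> bytes:
    # Assumption: HMAC replaces the asymmetric signature of real attestation hardware.
    return hmac.new(key, measurement.encode() + nonce, hashlib.sha256).digest()


def verify_report(report: AttestationReport, key: bytes, expected_nonce: bytes) -> bool:
    expected_sig = sign_report(key, report.measurement, report.nonce)
    return (
        hmac.compare_digest(expected_sig, report.signature)      # report is authentic
        and hmac.compare_digest(report.nonce, expected_nonce)    # report is fresh
        and report.measurement in TRUSTED_MEASUREMENTS           # software is known
    )


def release_data(report: AttestationReport, key: bytes, expected_nonce: bytes) -> bool:
    # The device sends user data only if attestation succeeds.
    return verify_report(report, key, expected_nonce)


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical; a real system would verify against a hardware root of trust
    nonce = os.urandom(16)
    measurement = next(iter(TRUSTED_MEASUREMENTS))
    report = AttestationReport(measurement, nonce, sign_report(key, measurement, nonce))
    print("data released:", release_data(report, key, nonce))
```

The point of the sketch is the ordering: verification happens on the device, and a failed check on authenticity, freshness, or the software measurement means no data leaves the phone.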

First Applications on Pixel 10

In practice, Private AI Compute will initially be used in two features on Pixel 10 smartphones. According to Google, the Magic Cue function, which generates contextual suggestions based on screen content, will become “even more helpful” through the cloud connection.

The Recorder app also benefits from the new infrastructure: transcription summaries are now available in more languages. Google uses the cloud models to extend the limited capabilities of on-device AI and promises that this does not compromise data protection.

Despite the data protection promises, local AI processing remains superior in some respects: NPUs offer lower latency because no data transfer is required. In addition, on-device features work reliably even without an internet connection. Google sees Private AI Compute as a hybrid approach that switches between local and cloud-based processing depending on the requirements.
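Such a hybrid approach could be sketched as a simple routing decision. Google has not published how this choice is made; the `Request` fields and the `choose_target` rule below are assumptions for illustration only.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"


@dataclass(frozen=True)
class Request:
    needs_large_model: bool  # hypothetical flag: task exceeds on-device model capability
    online: bool             # whether the device currently has connectivity


def choose_target(request: Request) -> Target:
    # Without connectivity, only local processing is possible.
    if not request.online:
        return Target.ON_DEVICE
    # Complex tasks go to the larger cloud model; everything else stays local
    # to benefit from lower latency.
    if request.needs_large_model:
        return Target.PRIVATE_CLOUD
    return Target.ON_DEVICE


if __name__ == "__main__":
    for req in (Request(True, True), Request(True, False), Request(False, True)):
        print(req, "->", choose_target(req).value)
```

In this sketch, a complex task requested while offline falls back to the on-device model, which reflects the article's point that local features keep working without an internet connection.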

How robust Private AI Compute's security mechanisms are in practice will only become clear once independent security analyses are available. Google has published a Technical Brief with further details on the architecture. More AI features connected to Private AI Compute are expected to follow soon.

(fo)