MLPerf Inference 4.1: Nvidia B200, AMD MI300X and Granite Rapids make their debut

Nvidia's Blackwell leaves the competition behind, AMD's MI300X makes a good debut and Intel's Xeon 6980P and AMD's Epyc Turin also make a tentative appearance.

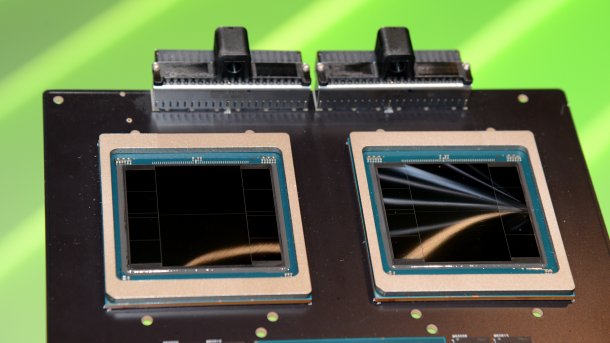

(Image: c't / csp)

The benchmark consortium MLCommons has published the results of the MLPerf Inference benchmark suite. Unlike large-scale AI training, where some submissions use thousands of accelerators simultaneously, inference runs on individual machines with comparatively few accelerators, usually up to eight. They compete in various tests, ranging from the rather dated ResNet-50 to larger AI models such as Llama2-70b, Stable Diffusion XL and the new Mixtral 8x7b.

In round 4.1 of the Inference ranking, AMD's Instinct MI300X accelerator, Nvidia's Blackwell B200, Intel's Xeon 6980P Granite Rapids and Google's TPU v6e, which can only be rented rather than purchased, are competing for the first time, albeit in very different categories. Google, for example, only submitted TPU v6e results for Stable Diffusion XL, where the chip was around three times as fast as its predecessor, the TPU v5, but only kept pace with Nvidia's H100 PCIe 80GB, not with the SXM version of the H100.


AMD MI300X roughly on a par with Nvidia H100

The premiere of AMD's MI300X accelerators in MLPerf was long awaited, and there are now four results: three submitted by AMD and one by Dell. However, AMD and Dell only competed in the Llama2-70b categories. Presumably the accelerators showed no advantage in the older AI models, and the optimizations for the new Mixtral 8x7b were most likely not yet complete. Ahead of the release, AMD's Director of GPU Product Marketing, Mahesh Balasubramanian, said that the company was pleased to finally be involved and that the newer generative AI models Llama2-70b, Stable Diffusion XL and Mixtral 8x7b in particular were representative of what the industry cares about.

One of the AMD systems already used the upcoming Epyc Turin (Zen 5) processors as the host for its eight MI300X accelerators, and promptly turned out to be the fastest of the four submissions. Each MI300X has 192 GB of HBM3 memory and was configured for the regular 750 watts.

Compared with Nvidia's Grace Hopper GH200 (144 GB), the MI300X still has room for improvement: it achieved just under two thirds of Nvidia's performance in the server evaluation and three quarters in the offline evaluation. The comparison with the only standalone H200 submission (number 4.1-0045) is somewhat unfair, as its SXM module was equipped with special cooling and allowed to draw 1000 watts. AMD trailed this submission by 40 and 32 percent respectively.
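The comparisons above switch between "X percent of Nvidia's performance" and "X percent behind". The two are complements, but "percent behind" is not the same as how much faster the reference system is. A minimal Python sketch of the conversion; the function names are hypothetical and the only inputs are ratios quoted in this article, not actual MLPerf throughput figures:

```python
def percent_behind(relative_perf: float) -> float:
    """How many percent a system trails the reference, given its performance
    as a fraction of the reference (0.75 = three quarters)."""
    if relative_perf <= 0:
        raise ValueError("relative performance must be positive")
    return (1.0 - relative_perf) * 100.0


def reference_lead(relative_perf: float) -> float:
    """How many percent faster the reference is than the trailing system."""
    if relative_perf <= 0:
        raise ValueError("relative performance must be positive")
    return (1.0 / relative_perf - 1.0) * 100.0


# Ratios from the text: three quarters of the GH200 (offline), and
# 40 percent behind the 1 kW H200, i.e. 60 percent of its score.
for label, ratio in [("vs GH200, offline", 0.75), ("vs 1 kW H200", 0.60)]:
    print(f"{label}: {percent_behind(ratio):.0f}% behind, "
          f"reference {reference_lead(ratio):.0f}% faster")
```

Read this way, "40 percent behind" means the 1 kW H200 delivers roughly two thirds more throughput than the MI300X.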

(Image: c't / Carsten Spille)

The picture is similar for the systems with eight accelerators: the MI300X reached between 72 and 75 percent of the performance of Nvidia's H200-SXM (141 GB), and 69 and 72 percent of the 1 kW H200 in the offline and server scores respectively. Compared with Nvidia's H100 configurations with 80 GB of memory, which still account for the majority of Hopper accelerators in use, the MI300X is on a par in the offline ranking and 5 percent behind in the server ranking. Judging by the progress Nvidia, among others, has made over successive MLPerf rounds, AMD can certainly make up this gap.

Interestingly, AMD also submitted a configuration with a single MI300X, which makes it possible to assess scalability. Thanks to the larger total memory, the eight-accelerator system scales by a factor of 8.34 in the latency-constrained server evaluation, slightly above the ideal linear scaling of pure compute, and by 7.68 in the offline evaluation, just below it.
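The scaling factors above are the eight-accelerator result divided by the single-accelerator result; dividing again by the number of accelerators gives the efficiency relative to ideal linear scaling. A short Python sketch using only the two factors from the text (the function names are illustrative):

```python
def scaling_factor(throughput_n: float, throughput_1: float) -> float:
    """Speedup of an n-accelerator system over a single accelerator."""
    return throughput_n / throughput_1


def scaling_efficiency(factor: float, n_accelerators: int) -> float:
    """Fraction of ideal linear scaling; 1.0 means perfectly linear."""
    return factor / n_accelerators


# Factors reported for 8x MI300X vs 1x MI300X.
for scenario, factor in {"server": 8.34, "offline": 7.68}.items():
    eff = scaling_efficiency(factor, 8)
    print(f"{scenario}: {eff:.1%} of linear scaling")
```

An efficiency above 1.0, as in the server case, indicates superlinear scaling, which the article attributes to the larger total memory of the eight-accelerator system.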

Nvidia Blackwell B200: Blazingly fast, with a few tricks

Nvidia's Blackwell accelerator B200, which was presented at GTC 2024, also makes its first public appearance in the Llama2-70B benchmark in MLPerf Inference 4.1; Nvidia did not submit any other results for the preview system (4.1-0074). Blackwell was 2.56 times faster in the server evaluation and 2.51 times faster in offline mode compared to the H200-SXM, which is also operated with 1000 watts. Compared to the GH200 superchip, the factors rose to 2.77 and 2.76 respectively.

For the first time, Nvidia is publicly using its Quasar Quantization System, which uses Blackwell's Transformer Engine to reduce individual computation steps to FP4 precision, saving not only compute time but also memory. Both Nvidia and MLCommons emphasized that the accuracy requirements for the results were met.
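The article gives no details on how Quasar works, so the following is only a generic illustration of the technique described, not Nvidia's implementation: block-wise quantization to the FP4 E2M1 format (1 sign, 2 exponent and 1 mantissa bit), in which a block of values shares one scale factor. The function names and the simple round-to-nearest scheme are assumptions chosen for illustration:

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1.
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(block: List[float]) -> Tuple[float, List[float]]:
    """Snap a block of values onto the FP4 grid using one shared scale.

    Returns (scale, quantized); quantized values are stored as floats that
    lie on the E2M1 grid. Multiply by the scale to dequantize.
    """
    amax = max((abs(x) for x in block), default=0.0)
    # Map the largest magnitude in the block onto the largest FP4 value (6.0).
    scale = amax / E2M1_MAGNITUDES[-1] if amax > 0 else 1.0
    quantized = []
    for x in block:
        target = abs(x) / scale
        # Round to the nearest representable magnitude (ties go to the smaller one).
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append(nearest if x >= 0 else -nearest)
    return scale, quantized


def dequantize_block_fp4(scale: float, quantized: List[float]) -> List[float]:
    """Recover approximate original values from the FP4 grid values."""
    return [q * scale for q in quantized]


scale, q = quantize_block_fp4([6.0, -3.0, 1.2, 0.2])
print(scale, q, dequantize_block_fp4(scale, q))
```

Each value then needs 4 bits instead of the 16 bits of FP16 or BF16, plus a small overhead for the shared scale. The rounding error visible in the example (1.2 becomes 1.0, 0.2 becomes 0.0) is why adherence to the accuracy requirements matters.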

Nvidia's own math is a little more flattering: it compares the B200 with a per-GPU figure for the older H100 80 GB (submission 4.1-0043), a metric that MLPerf does not specify. This yields marketing-friendly factors of 4 and 3.7 for server and offline respectively.

Intel Xeon 6900 AP Granite Rapids

Intel's preview entry 4.1-0073 uses the Xeon 6980P from the Granite Rapids series, which has not yet been officially launched. The Xeon 6980P runs in a server based on the Avenue City platform without any accelerators, so all results come from the CPUs alone.

Compared with a dual Xeon Platinum 8592+ system, the top model of the current generation, the new processors are slightly more than twice as fast in the RetinaNet server evaluation and almost twice as fast in the offline evaluations of RetinaNet and GPTj-99. In the other tests, too, they beat the previous generation, for which Supermicro and Quanta submitted results alongside Intel, by at least 70 percent.

However, a direct comparison with AMD's next-generation Epyc processors is still pending: the Turin CPUs appear in MLPerf Inference 4.1, but only as host processors for MI300X accelerators.

Background: MLPerf Training and Inference

MLPerf runs like a competition, with two rounds per year: Training and Inference. Participating manufacturers can choose from various benchmarks for training or running AI models, but can also submit results for several of them or all of them.

Similar to scientific publications, all results undergo peer review and are made available to MLCommons members in advance for validation. The chance of getting away with faked or embellished values is therefore low. The results are divided into "offline" and "server" results. Offline results are generally slightly higher, but server results must meet latency requirements, i.e. return the first results to the user within a specified time. This rules out some optimizations that can increase throughput in the offline scenario.
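The article only states that server results must return first results within a specified time. A minimal Python sketch of such a validity check, assuming a percentile-based latency bound; the 99th percentile, the bound and the nearest-rank method are illustrative assumptions, not the exact MLPerf LoadGen rules:

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(pct / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]


def server_run_valid(latencies_s: Sequence[float], bound_s: float,
                     pct: float = 99.0) -> bool:
    """A server-style run counts only if the chosen latency percentile
    stays within the bound; an offline run has no such constraint."""
    return percentile(latencies_s, pct) <= bound_s


# Hypothetical time-to-first-result samples in seconds.
fast = [0.1] * 100
bursty = [0.1] * 95 + [5.0] * 5
print(server_run_valid(fast, bound_s=2.0), server_run_valid(bursty, bound_s=2.0))
```

Batching requests more aggressively would raise throughput but push the tail latency past the bound, which is why such optimizations only pay off in the offline scenario.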

There are different categories such as Data Center and Edge, each in a "closed" and an "open" version. The open category allows optimizations and adapted AI models. In the closed category, the results must meet a minimum accuracy requirement set by MLCommons. In the open category the rules are more relaxed, but both the model variants used and the accuracy achieved must be disclosed.
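In MLPerf's benchmark names, a suffix such as the -99 in GPTj-99 denotes a target of 99 percent of the reference model's accuracy. A minimal Python sketch of a closed-category style check under that assumption; the metric names and scores are hypothetical, and the real rules define the metrics per benchmark:

```python
from typing import Mapping


def meets_accuracy_target(achieved: Mapping[str, float],
                          reference: Mapping[str, float],
                          target_fraction: float = 0.99) -> bool:
    """True if every reference metric reaches target_fraction of its
    reference value (raises KeyError if a metric was not reported)."""
    return all(achieved[name] >= target_fraction * ref
               for name, ref in reference.items())


# Hypothetical reference and submission scores.
reference = {"rouge1": 40.0}
print(meets_accuracy_target({"rouge1": 39.7}, reference))  # within 99 percent
print(meets_accuracy_target({"rouge1": 39.5}, reference))  # below the target
```

A stricter variant such as 99.9 percent only changes `target_fraction`, which is exactly the margin an aggressive quantization scheme like FP4 has to stay within.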

(Image: MLCommons)

The hardware is also divided into "available" and "preview". Available systems must be on the market when the benchmark is published, while those with a preview label must make it onto the market by the next round of MLPerf.

(csp)